SK Hynix, Samsung set to benefit from explosive HBM sales growth

TrendForce expects HBM prices to rise 5-10% in 2025, when HBM could account for 30% of total DRAM value

May 07, 2024 (GMT+09:00)

Sales of high-bandwidth memory (HBM), the red-hot DRAM product essential to artificial intelligence devices, will grow significantly in the coming years, benefiting market leaders such as SK Hynix Inc. and Samsung Electronics Co.

According to Taiwan-based market researcher TrendForce, the HBM market is poised for robust growth, driven by significant pricing premiums and increased capacity needs for AI chips.

HBM's unit price is several times that of conventional DRAM and about five times that of double data rate 5 (DDR5) chips.

This pricing, combined with new AI product launches, is expected to dramatically raise HBM’s share in both the capacity and market value of the DRAM market through 2025, it said.

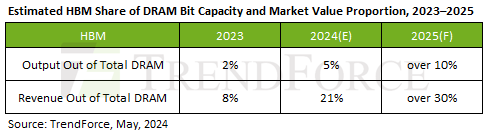

“HBM’s share of total DRAM bit capacity is estimated to rise from 2% in 2023 to 5% in 2024 and surpass 10% by 2025,” Avril Wu, senior research vice president at TrendForce, said in a research note.

In terms of market value, HBM is projected to account for more than 20% of the total DRAM market starting in 2024, potentially exceeding 30% by 2025, she said.

Last year, HBM accounted for 8% of the entire DRAM market in value.
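The bit-capacity and market-value shares quoted above can be combined into a rough cross-check of HBM's price premium. The Python sketch below is an illustrative calculation, not a TrendForce method: it assumes the quoted thresholds (2% of bits and 8% of value in 2023, 5% and 20% in 2024, 10% and 30% in 2025) are exact values, and the function name `implied_price_ratio` is hypothetical. It divides HBM's value per bit by that of all other DRAM combined.

```python
def implied_price_ratio(value_share: float, bit_share: float) -> float:
    """Hypothetical helper: HBM's per-bit price relative to all other DRAM.

    value_share: HBM's share of total DRAM market value (between 0 and 1)
    bit_share:   HBM's share of total DRAM bit capacity (between 0 and 1)
    """
    if not (0 < value_share < 1 and 0 < bit_share < 1):
        raise ValueError("shares must be strictly between 0 and 1")
    hbm_value_per_bit = value_share / bit_share
    other_value_per_bit = (1 - value_share) / (1 - bit_share)
    return hbm_value_per_bit / other_value_per_bit


# (value share, bit share) per year; 2024 and 2025 use the quoted lower bounds
shares = {
    2023: (0.08, 0.02),
    2024: (0.20, 0.05),
    2025: (0.30, 0.10),
}

if __name__ == "__main__":
    for year, (value, bits) in shares.items():
        print(f"{year}: {implied_price_ratio(value, bits):.2f}x")
```

Under those assumptions, HBM's implied per-bit price comes to roughly 4.3 times that of other DRAM in 2023, about 4.75 times in 2024 and about 3.9 times in 2025, in the same range as the premium described above. Because the 2024 and 2025 shares are thresholds rather than point estimates, the actual ratios could differ.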

2025 HBM PRICE NEGOTIATIONS ALREADY COMMENCED

Wu said negotiations over 2025 HBM pricing have already begun this quarter.

Because overall DRAM capacity is limited, suppliers have preliminarily raised prices by 5–10% to manage capacity constraints, a move affecting HBM products including HBM2E, HBM3 and HBM3E, she said.

Negotiations have started early for three main reasons, according to TrendForce.

First, HBM buyers maintain high confidence in AI demand prospects and are willing to accept continued price increases.

Second, yield rates for HBM3E chips made with the through-silicon via (TSV) process currently range from only 40% to 60%, leaving room for improvement.

Moreover, not all major suppliers have passed customer qualifications for HBM3E, leading buyers to accept higher prices to secure stable and quality supplies, Wu said.

Third, future per-gigabit (Gb) pricing may vary depending on DRAM suppliers' reliability and supply capabilities, which could create disparities in average selling prices and, consequently, affect HBM makers’ profitability, she said.

MIGRATION TO HBM3E TO BENEFIT SK, SAMSUNG

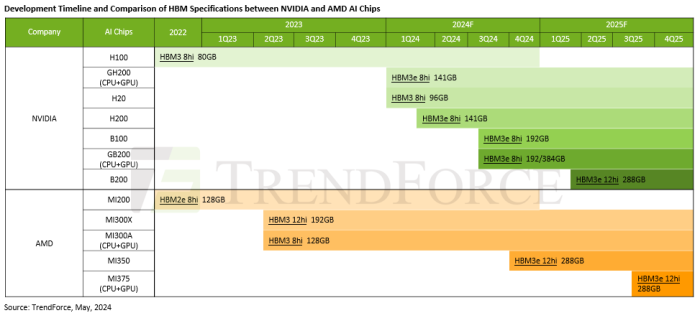

Looking ahead to 2025, from the perspective of major AI solution providers, there will be a significant shift in HBM specification requirements toward HBM3E, with an increase in 12-layer stack products anticipated, said TrendForce. This shift, it added, is expected to drive up the capacity of HBM per chip.

The market researcher said the annual HBM demand growth rate will approach 200% in 2024 and is expected to double in 2025.

Analysts said robust HBM demand growth will lead to increased sales and improved profitability for top HBM players such as SK Hynix and Samsung.

SK Hynix Chief Executive Kwak Noh-jung said last week that the company's HBM production capacity is almost fully booked through next year.

To solidify SK Hynix’s HBM market leadership, he said, the company plans to provide samples of its next-generation 12-layer HBM3E chip to clients in May and begin mass production in the third quarter, ahead of schedule.

Kim Jae-june, vice president of Samsung’s memory business, said in late April that the company had begun mass-producing its 8-layer HBM3E chips for generative AI chipsets and expects to generate revenue from them starting at the end of the second quarter.

NVIDIA, AMD’S NEW AI CHIPS

The Samsung executive also said the company plans to start producing a 12-layer version of its fifth-generation HBM3E as early as the second quarter.

Among memory chipmakers, SK Hynix is the biggest beneficiary of the explosive increase in AI adoption: it dominates production of HBM, which is critical for generative AI computing, and is the top HBM supplier to Nvidia Corp., which controls 80% of the AI chip market.

Samsung, which has vowed to triple its HBM output this year, is eager to pass Nvidia’s ongoing quality tests.

HBM3E is expected to power Nvidia’s new AI chips such as B100 and GB200 and AMD’s MI350 and MI375, which are set for launch later this year.

Write to Chae-Yeon Kim at why29@hankyung.com

In-Soo Nam edited this article.