Samsung to launch low-power, high-speed AI chips in 2024

It will also unveil advanced three-dimensional (3D) chip packaging next year

Nov 21, 2023 (Gmt+09:00)

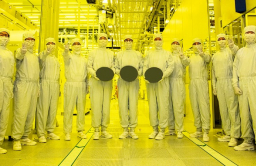

Samsung Electronics Co. will launch low latency wide (LLW) DRAMs next year, designed to be 70% more power-efficient in artificial intelligence applications than regular DRAMs, the company said at its annual investors forum last week.

LLW DRAMs will become its flagship, next-generation chips and be embedded into AI devices such as extended reality headsets. Samsung aims to beef up artificial intelligence chip foundry sales to about 50% of its total foundry sales in five years.

The new AI chips will have more input/output (I/O) terminals in the semiconductor circuit than existing DRAMs, which raises data processing speed and capacity.

3D PACKAGING

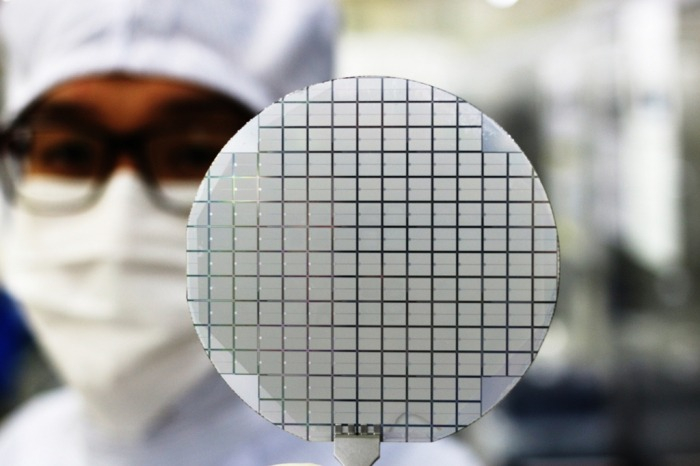

Next year, the world’s No. 1 memory chipmaker will unveil an advanced three-dimensional (3D) chip packaging technology, including the most advanced 3.5D packaging, the company said during the IR event.

It increases the processing speed and data processing capacity of electronic devices by stacking processors such as the central processing unit (CPU) and graphics processing unit (GPU) vertically with high-bandwidth memory (HBM) chips.

3 NM PROCESS

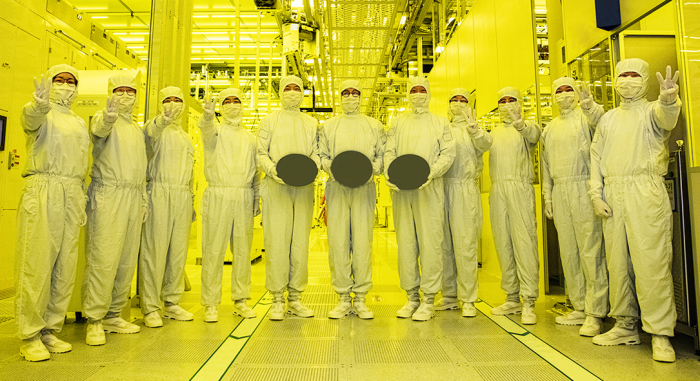

Samsung will also further refine its 3-nanometer chip processing technology, currently the industry’s smallest and most advanced process node, to suit AI applications.

Last year, it became the first in the world to begin mass production of 3 nm chips for fabless clients, ahead of Taiwan’s TSMC Co.

Earlier this year, Samsung was said to have significantly advanced the production yields of its first-generation 3 nm process technology to “a perfect level.”

“We can increase (memory chips’) performance by 2.2 times every two years,” Jeong Ki-bong, vice president of Samsung Foundry, the foundry semiconductor business of Samsung Electronics, told investors at the IR event.

GDP STRATEGY

At the investors forum, Samsung presented GDP, the initials of GAA, DRAM and packaging, as the keyword for its new strategy.

GAA, short for gate-all-around, reduces the leakage current of processors with a circuit width of 3 nm or below. It is Samsung’s key architecture to develop next-generation DRAMs and packaging technology.

The 3 nm technology is the first process node on which Samsung applied its first-generation GAA structure transistors.

IN COLLABORATION WITH TESLA

Samsung is working with Tesla Inc. to develop the EV maker’s next-generation Full Self-Driving (FSD) chips for Level-5 autonomous driving vehicles.

Samsung recently hired YoonJung Ahn, a design head at Waymo, Alphabet’s autonomous driving technology unit, as executive vice president to lead the design management center.

The chipmaker also plans to develop a 4 nm AI accelerator, a high-performance computing machine used to process AI workloads.

According to market research firm Omdia, the AI semiconductor market, estimated at $55.3 billion this year, is expected to reach $112 billion by 2027.
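For a sense of scale, the Omdia figures imply a compound annual growth rate that can be worked out directly. The sketch below is illustrative only: the `cagr` helper is a hypothetical name, and it assumes four compounding years between this year's estimate (2023) and 2027, which the article does not state explicitly.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% a year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted from Omdia in the article, in billions of US dollars.
market_2023 = 55.3
market_2027 = 112.0

# Assumption: four compounding years between the 2023 estimate and 2027.
growth = cagr(market_2023, market_2027, years=4)
print(f"Implied annual growth: {growth:.1%}")
```

Under that assumption, the market would need to grow by roughly 19% a year to roughly double over the period.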

Write to Jeong-Soo Hwang and Ik-Hwan Kim at hjs@hankyung.com

Yeonhee Kim edited this article.

More To Read

-

Korean chipmakersSamsung Electronics to up AI chip foundry sales to 50% by 2028

Korean chipmakersSamsung Electronics to up AI chip foundry sales to 50% by 2028Nov 20, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung to unveil 3D AI chip packaging tech SAINT to rival TSMC

Korean chipmakersSamsung to unveil 3D AI chip packaging tech SAINT to rival TSMCNov 12, 2023 (Gmt+09:00)

-

Artificial intelligenceOn-device AI chips: New battleground for chipmakers

Artificial intelligenceOn-device AI chips: New battleground for chipmakersNov 05, 2023 (Gmt+09:00)

-

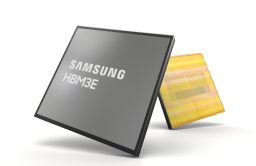

Korean chipmakersSamsung showcases HBM3E DRAM, automotive chips

Korean chipmakersSamsung showcases HBM3E DRAM, automotive chipsOct 21, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung Elec to launch HBM4 in 2025 to win war in AI sector

Korean chipmakersSamsung Elec to launch HBM4 in 2025 to win war in AI sectorOct 10, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung set to keep supremacy in low power DRAM with game changer

Korean chipmakersSamsung set to keep supremacy in low power DRAM with game changerSep 26, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung set to supply HBM3, packaging services to AMD

Korean chipmakersSamsung set to supply HBM3, packaging services to AMDAug 22, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung Elec to provide HBM3, packaging service to Nvidia

Korean chipmakersSamsung Elec to provide HBM3, packaging service to NvidiaAug 01, 2023 (Gmt+09:00)

-

Korean chipmakersSamsung to make 3 nm chips for Nvidia, Qualcomm, IBM, Baidu

Korean chipmakersSamsung to make 3 nm chips for Nvidia, Qualcomm, IBM, BaiduNov 22, 2022 (Gmt+09:00)

-

Korean chipmakersSamsung's 3 nm chip narrows microchip gap with TSMC

Korean chipmakersSamsung's 3 nm chip narrows microchip gap with TSMCJun 30, 2022 (Gmt+09:00)