Samsung, SK Hynix join forces with foreign rivals to win AI chip war

Chiplet, CXL and customized high-performance chips are expected to lead the global chip market’s growth in the AI era

Dec 31, 2023 (Gmt+09:00)

South Korea’s two chip giants, Samsung Electronics Co. and SK Hynix Inc., are expected to further deepen ties with rivals and customers around the world in the new year to advance their chiplet, Compute Express Link (CXL) and customized chip technologies and win the artificial intelligence chip battle.

These are core chip technologies for which demand is expected to rise exponentially following the arrival of generative AI, which has ushered in a new AI era.

Earlier this month, Advanced Micro Devices Inc. (AMD) unveiled its new AI accelerator, the Instinct MI300X, which is built with a chiplet design.

CHIPLET MARKET TO BURGEON

Chiplet design combines different functional units, such as a graphics processing unit (GPU), a central processing unit (CPU) and an input/output (I/O) die, in a single package. This allows third-party chips made on different process nodes to be assembled together, for example a GPU built on a 7-nanometer (nm) process, a CPU built on a 4-nm process and an I/O die built on a 14-nm process.

This is similar in purpose to the system-on-chip (SoC) design, which integrates different functional blocks on a single die.

SoCs were the mainstream approach until recently, but fitting multiple functions into a single SoC is becoming costlier as process technology rapidly shrinks below 7 nm.

As server CPU and GPU dies grow larger, production yields fall accordingly, further raising SoC manufacturing costs.

This is why chipmakers have shifted their focus to chiplets, which allow components from different producers to be mixed and matched, offering chip suppliers better yields and lower costs.
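The yield argument can be made concrete with the textbook Poisson yield model, Y = exp(-D × A), where D is the defect density and A is the die area. The sketch below is illustrative only: the defect density and die sizes are assumed values, not figures from the article, the helper names are hypothetical, and it ignores the packaging, die-to-die interconnect and testing costs that real chiplet designs add back.

```python
import math


def poisson_yield(defect_density_per_cm2: float, die_area_cm2: float) -> float:
    """Expected fraction of defect-free dies under a Poisson model: exp(-D * A)."""
    return math.exp(-defect_density_per_cm2 * die_area_cm2)


def monolithic_to_chiplet_cost_ratio(
    defect_density_per_cm2: float, total_area_cm2: float, n_chiplets: int
) -> float:
    """Silicon cost per good product: monolithic version divided by chiplet version.

    Assumes silicon cost scales with area and that each chiplet is tested
    before packaging, so a defect scraps only the chiplet it lands on.
    Packaging and interconnect overheads are ignored.
    """
    mono_yield = poisson_yield(defect_density_per_cm2, total_area_cm2)
    chiplet_yield = poisson_yield(defect_density_per_cm2, total_area_cm2 / n_chiplets)
    # Cost per good unit is proportional to area / yield. Total silicon area
    # is the same in both cases, so the ratio reduces to a ratio of yields.
    return chiplet_yield / mono_yield


if __name__ == "__main__":
    D = 0.1  # assumed defects per cm^2, for illustration only
    for area in (1.0, 4.0, 8.0):  # 100, 400 and 800 mm^2 dies
        print(f"{area * 100:.0f} mm^2 die: yield {poisson_yield(D, area):.1%}")
    ratio = monolithic_to_chiplet_cost_ratio(D, 8.0, 4)
    print(f"800 mm^2 monolithic vs 4 chiplets: {ratio:.2f}x silicon cost")
```

With these assumed numbers the script prints yields of 90.5%, 67.0% and 44.9% for the three die sizes, and splitting the 800 mm^2 design into four chiplets cuts silicon cost per working product by roughly 45% (a ratio of 1.82x), because a defect scraps only the small chiplet it lands on.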

As chiplets have become a megatrend in the global chip industry, Samsung Electronics last year joined forces with rivals and customers such as AMD, Intel Corp. and Taiwan Semiconductor Manufacturing Company Ltd. (TSMC) to establish a chiplet ecosystem.

The Korean chip giant also launched the Multi-Die Integration (MDI) Alliance with its partner companies and major players in memory, package substrates and testing to address the rapid growth of the chiplet market for mobile and high-performance computing (HPC) applications.

According to MarketsandMarkets Research, the global chiplet market is forecast to jump to $148.0 billion in 2028 from $6.5 billion in 2023 at a compound annual growth rate (CAGR) of 86.7%.
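The quoted growth rate follows from the standard CAGR formula, (end / start)^(1 / years) - 1. As a quick consistency check (a sketch, not MarketsandMarkets' own methodology; the function names are hypothetical), the rounded endpoints give about 86.8%, in line with the 86.7% cited once rounding of the dollar figures is allowed for.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and period must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Chiplet market figures quoted in the article, in billions of US dollars.
implied = cagr(6.5, 148.0, 2028 - 2023)
print(f"Implied CAGR, 2023-2028: {implied:.1%}")  # 86.8%
# Compounding the quoted 86.7% forward lands close to the $148.0 bn forecast.
print(f"2028 value at 86.7% CAGR: ${project(6.5, 0.867, 5):.1f} bn")
```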

CXL

Competition to take the lead in CXL technology is also getting fiercer.

CXL is a unified interface standard that links processors, such as CPUs and GPUs, with memory and other devices.

It is considered one of the next-generation memory solutions because it enables high-speed, low-latency communication between the host processor and devices, according to Samsung Electronics.

As the generative AI boom has sharply increased the amount of data to process, demand is growing rapidly for massive computing scale-up and highly responsive data communication.

In response, Intel formed a coalition with Samsung Electronics and SK Hynix to develop CXL, which can speed up data processing twofold.

Because CXL memory is built on dynamic random-access memory (DRAM) modules, its adoption is expected to increase memory demand.

The race to develop CXL DRAM is gaining traction.

Samsung Electronics last week announced that it has verified the interoperability of its CXL memory with Red Hat Enterprise Linux (RHEL) 9.3, an enterprise Linux operating system (OS) developed by US software company Red Hat Inc.

It aims to mass-produce CXL memory products in 2024.

The global CXL market is forecast to grow to $15 billion in 2028, according to market intelligence company Yole Intelligence.
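For readers wondering what OS-level support for CXL memory looks like in practice: on Linux, a CXL memory expander whose capacity is onlined as system RAM commonly shows up as a NUMA node that has memory but no CPUs. The sketch below assumes that convention and lists CPU-less nodes from sysfs (`/sys/devices/system/node`). It is not Samsung's or Red Hat's verification procedure, the helper names are hypothetical, and a CPU-less node is only a hint, since other memory types can appear the same way.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Parse a Linux cpulist string such as '0-3,8,10-11' into CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty cpulist means the node has no CPUs
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def cpuless_nodes(cpulists: dict[int, str]) -> list[int]:
    """Return the NUMA node ids whose cpulist is empty (no CPUs attached)."""
    return sorted(node for node, text in cpulists.items() if not parse_cpulist(text))


def read_node_cpulists(root: str = "/sys/devices/system/node") -> dict[int, str]:
    """Read each NUMA node's cpulist from sysfs; returns {} if root is absent."""
    result: dict[int, str] = {}
    base = Path(root)
    if not base.is_dir():
        return result
    for node_dir in base.glob("node[0-9]*"):
        cpulist = node_dir / "cpulist"
        if cpulist.is_file():
            result[int(node_dir.name[4:])] = cpulist.read_text()
    return result


if __name__ == "__main__":
    # Hypothetical host: two CPU sockets plus one CXL memory expander.
    sample = {0: "0-15\n", 1: "16-31\n", 2: "\n"}
    print(cpuless_nodes(sample))  # [2]
    # On a real Linux machine, inspect the live topology instead.
    print(cpuless_nodes(read_node_cpulists()))
```

Keeping the parsing separate from the sysfs read makes the logic testable on any machine, while the reader returns an empty mapping on systems without a NUMA sysfs tree.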

CUSTOMIZED CHIPS

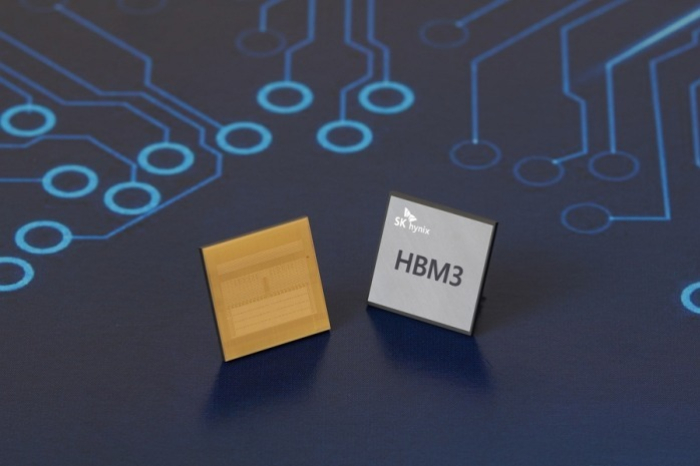

The Korean chip giants also pin high hopes on customized chips such as high bandwidth memory (HBM), demand for which is set to surge in the new year.

HBM is high-value, high-performance memory that vertically interconnects multiple DRAM chips, dramatically increasing data processing speed compared with earlier DRAM products.

HBM demand is growing in the new AI era because these chips power the high-performance computing systems that run generative AI.
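HBM's speed advantage comes largely from its very wide interface: peak bandwidth is bus width times per-pin data rate, divided by 8 to convert bits to bytes. The sketch below assumes typical JEDEC-class parameters (a 1,024-bit HBM3 stack at 6.4 Gb/s per pin and a 64-bit DDR5-4800 module); these figures are not from the article, and real products vary by generation and vendor.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: width (bits) x per-pin rate (Gb/s) / 8."""
    return bus_width_bits * data_rate_gbit_per_pin / 8


# Assumed JEDEC-class parameters for illustration (not from the article).
hbm3_stack = peak_bandwidth_gb_s(1024, 6.4)  # one HBM3 stack, 1,024-bit interface
ddr5_dimm = peak_bandwidth_gb_s(64, 4.8)     # one DDR5-4800 module, 64 data bits

print(f"HBM3 stack:     {hbm3_stack:.1f} GB/s")  # 819.2 GB/s
print(f"DDR5-4800 DIMM: {ddr5_dimm:.1f} GB/s")   # 38.4 GB/s
print(f"Ratio: {hbm3_stack / ddr5_dimm:.1f}x")   # 21.3x
```

AI accelerators typically place several HBM stacks next to the processor in the same package, so aggregate bandwidth scales with the number of stacks.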

AI accelerator producers such as US fabless chip designer Nvidia Corp., AMD and Intel are actively seeking to secure HBM chips for their processors. Their combined pre-orders are already estimated at about 1 trillion won ($770 million).

To stay ahead of its rivals, SK Hynix has forged a partnership with Nvidia to develop customized HBM chips. Meanwhile, Samsung and AMD are deepening their ties.

Foundry players have also joined the race, seeking to advance their fabrication processes to win customized high-performance chip orders from big customers such as Google and Amazon.com.

Write to Jeong-Soo Hwang at hjs@hankyung.com

Sookyung Seo edited this article.
