
Upstage's AI beats global peers in benchmark math tests


Its MathGPT outperformed Microsoft's ToRA math-specific large language model in two global tests


Jan 08, 2024 (GMT+09:00)


South Korean artificial intelligence tech startup Upstage said on Monday that its math-specific large language model (LLM), jointly developed with local startup Masspresso and telecom leader KT Corp., has outperformed Microsoft Corp.’s ToRA in two global math benchmark tests.

Upstage’s MathGPT scored 0.488 out of a possible 1 in the latest MATH benchmark test for LLMs with 13 billion parameters or fewer. The test is based on a dataset of 12,500 challenging math problems.

The Korean model outperformed OpenAI's GPT-4, which scored 0.425, as well as ChatGPT (0.355) and ToRA (0.481), Upstage said.

In the GSM8K benchmark, or Grade School Math 8K, MathGPT topped the LLM list. The Korean AI scored 0.782, beating ToRA’s 0.758. The benchmark is based on a dataset of 8,500 high-quality, linguistically diverse grade school math word problems.

Math has been a difficult field in which to apply LLMs due to the need for logical reasoning and abstract thinking.

Upstage has been developing the math-specific LLM with Masspresso, the operator of the AI-backed learning platform Qanda, since last year. This is part of the two AI startups’ partnership with KT, which last September invested 10 billion won ($7.6 million) in each of the tech ventures to strengthen its hyperscale AI capabilities.

Masspresso, which collects around 10 million pieces of data on math problems and explanations per day, has provided Upstage with the dataset.

KT operates Korea’s largest graphics processing unit (GPU) farm, a set of servers that allocate resources to perform calculations quickly, and uses it to accelerate the two startups’ math-specific LLM development.

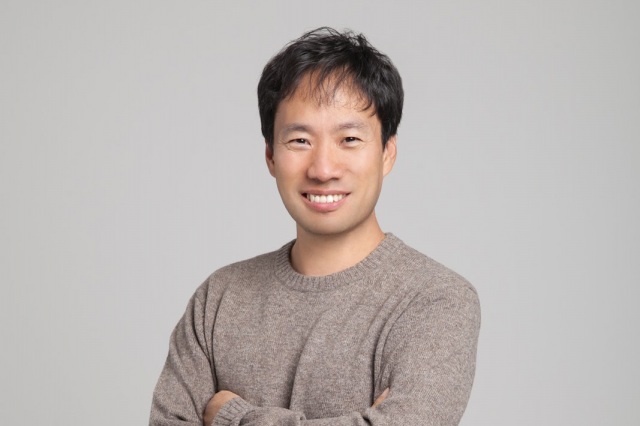

Upstage will lead the innovation of generative AI in math and other domains with its global top LLM tech, said Chief Executive Kim Seong-hoon.

AI in the global edtech industry, which has been at the level of Google search, will be upgraded with MathGPT, said a Qanda official.

Write to Kang-Ho Jang at autonomy@hankyung.com

Jihyun Kim edited this article.

More To Read

-

Artificial intelligenceUpstage to develop math-specific LLM with Qanda

Artificial intelligenceUpstage to develop math-specific LLM with QandaNov 15, 2023 (Gmt+09:00)

-

Artificial intelligenceUpstage to develop shopping-specific AI with ConnectWave

Artificial intelligenceUpstage to develop shopping-specific AI with ConnectWaveSep 12, 2023 (Gmt+09:00)

-

Artificial intelligenceS.Korea's KT invests in domestic startups Upstage, Qanda

Artificial intelligenceS.Korea's KT invests in domestic startups Upstage, QandaSep 11, 2023 (Gmt+09:00)

-

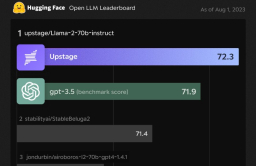

Artificial intelligenceS.Korean LLM by Upstage beats global benchmark ChatGPT

Artificial intelligenceS.Korean LLM by Upstage beats global benchmark ChatGPTAug 01, 2023 (Gmt+09:00)

-

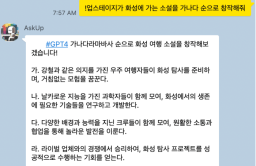

Artificial intelligenceUpstage’s AskUp offers Korea’s first GPT-4-powered chatbot service

Artificial intelligenceUpstage’s AskUp offers Korea’s first GPT-4-powered chatbot serviceMar 17, 2023 (Gmt+09:00)