Samsung to supply $752 million in Mach-1 AI chips to Naver, replace Nvidia

Samsung is also in talks with Big Tech firms such as Microsoft and Meta to supply its new AI accelerator

Mar 22, 2024 (GMT+09:00)


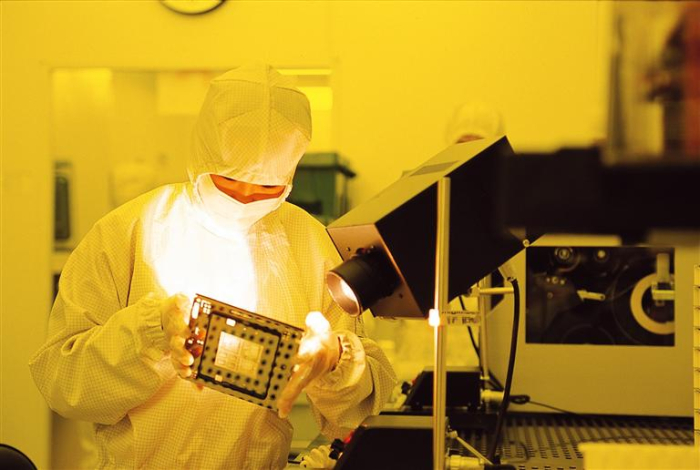

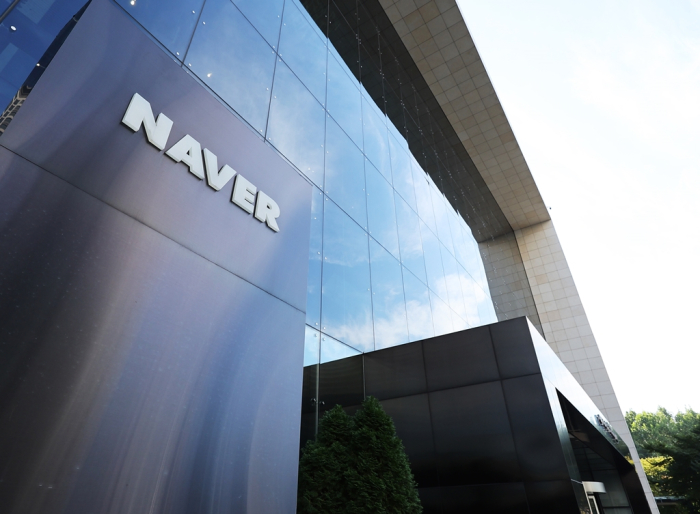

Samsung Electronics Co., the world's top memory chipmaker, will supply its next-generation Mach-1 artificial intelligence chips to Naver Corp. by the end of this year in a deal worth up to 1 trillion won ($752 million).

With the contract, Naver will significantly reduce its reliance on Nvidia Corp. for AI chips.

Samsung's System LSI business division has agreed with Naver on the supply deal, and the two companies are in final talks to fine-tune the exact volume and prices, people familiar with the matter said on Friday.

Samsung, South Korea's tech giant, hopes to price the Mach-1 AI chip at around 5 million won ($3,756) apiece, and Naver wants to receive between 150,000 and 200,000 units of the AI accelerator, sources said.
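The reported unit price and volume range can be checked against the headline deal value. The sketch below is illustrative arithmetic only. The won/dollar rate is an assumption derived from the article's own conversion of 5 million won to $3,756; the $752 million headline figure implies a marginally different rate, so the dollar results differ slightly from it.

```python
# Illustrative arithmetic: do the reported price and volume match the deal value?
# Price and volume come from the article; the exchange rate is ASSUMED,
# derived from the article's conversion of 5 million won to $3,756.

UNIT_PRICE_WON = 5_000_000          # reported target price per Mach-1 chip
UNIT_PRICE_USD = 3_756              # article's dollar conversion of that price
VOLUME_RANGE = (150_000, 200_000)   # units Naver reportedly wants

# Won per dollar implied by the article's own conversion (an assumption).
implied_rate = UNIT_PRICE_WON / UNIT_PRICE_USD


def deal_value(units: int) -> tuple[int, float]:
    """Return (value in won, approximate value in US dollars) for a unit count."""
    won = units * UNIT_PRICE_WON
    return won, won / implied_rate


for units in VOLUME_RANGE:
    won, usd = deal_value(units)
    print(f"{units:,} units -> {won / 1e12:.2f} trillion won (~${usd / 1e6:,.0f} million)")
```

At the upper end of Naver's requested volume, the deal reaches exactly the 1 trillion won ceiling cited in the report.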

Naver, a leading Korean online platform giant, is expected to use Mach-1 chips in its servers for AI inference, replacing chips it has been procuring from Nvidia.

Leveraging its sale of Mach-1 chips to Naver as a stepping stone, Samsung plans to expand its client base to Big Tech firms. Samsung is already in supply talks with Microsoft Corp. and Meta Platforms Inc., sources said.

An accelerator is a special-purpose hardware device that uses multiple chips designed for data processing and computing.

MACH-1, COMPETITIVE IN PRICING, PERFORMANCE & EFFICIENCY

Kyung Kye-hyun, head of Samsung's semiconductor business, said during the company's annual general meeting on Wednesday that the Mach-1 AI chip is under development and that Samsung will begin mass production of a prototype by year-end.

Mach-1 is an AI accelerator in the form of a system-on-chip (SoC) that reduces the bottleneck between the graphics processing unit (GPU) and high bandwidth memory (HBM) chips, according to Samsung.

Kyung said Mach-1 is designed specifically for the transformer model architecture.

"By using several algorithms, it can reduce the bottleneck phenomenon that occurs between memory and GPU chips to one-eighth of what we are witnessing today and improve the power efficiency by eight times," he said. "It will enable large language model inference even with low-power memory instead of power-hungry HBM."

Unlike Nvidia's AI accelerators, which consist of GPUs and HBM chips, Mach-1 combines Samsung's proprietary processors with low-power (LP) DRAM chips.

With that design, Mach-1 has fewer data bottlenecks and consumes less power than Nvidia products, industry sources said.

Mach-1 also costs one-tenth as much as Nvidia's chips, they said.

TO WEAN ITSELF OFF NVIDIA

Nvidia, the world's largest chip design firm and AI chip provider, posted an operating profit margin of 62% in the November-January quarter. Some $18.8 billion, or 40% of its server business revenue, came from AI inference chip sales last year.
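The two inference figures together imply the approximate size of Nvidia's server business. A rough back-of-envelope sketch, assuming the 40% share is exact (the article's numbers are rounded, so the result is approximate):

```python
# Back-of-envelope: server revenue implied by the article's inference figures.
# ASSUMPTION: the reported 40% share is exact; both inputs are rounded.

INFERENCE_REVENUE_BN = 18.8  # AI inference chip sales, $ billion (from the article)
INFERENCE_SHARE = 0.40       # share of server business revenue (from the article)

# If inference = share * total, then total = inference / share.
implied_server_revenue_bn = INFERENCE_REVENUE_BN / INFERENCE_SHARE

# Whatever is left over is non-inference server revenue.
non_inference_revenue_bn = implied_server_revenue_bn - INFERENCE_REVENUE_BN

print(f"Implied server revenue: ~${implied_server_revenue_bn:.1f} billion")
print(f"Non-inference remainder: ~${non_inference_revenue_bn:.1f} billion")
```

On these figures, Nvidia's server business would have been roughly $47 billion.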

Sources said Naver will use Samsung's Mach-1 chips to power servers for its AI map service, Naver Place. Additional Mach-1 chip supply to Naver is possible if the first batch shows "good performance," they said.

Naver has been reducing its reliance on Nvidia for AI chips.

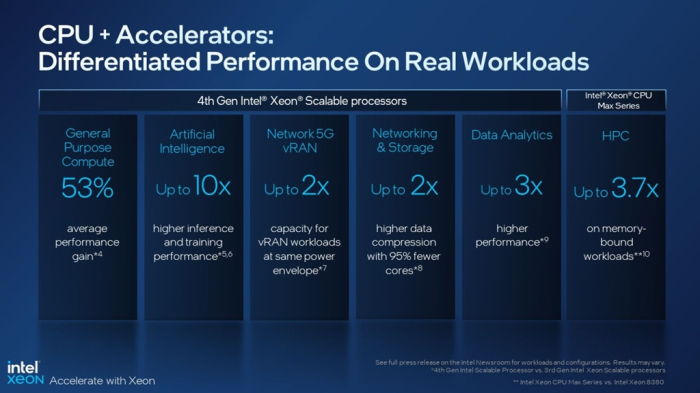

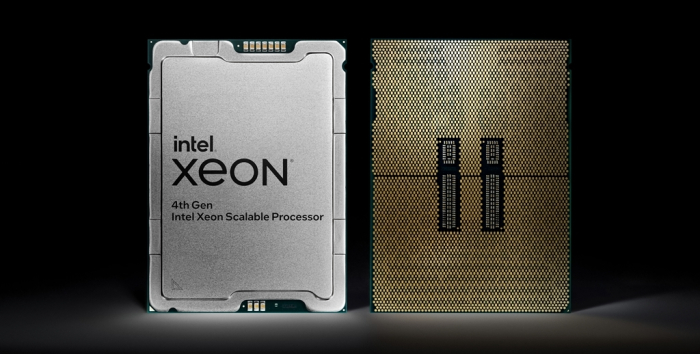

Last October, Naver replaced Nvidia's GPU-based server with Intel Corp.'s central processing unit (CPU)-based server.

Naver's AI server switch comes as information technology firms worldwide grow increasingly frustrated with Nvidia's GPU price hikes and a global shortage of its GPUs.

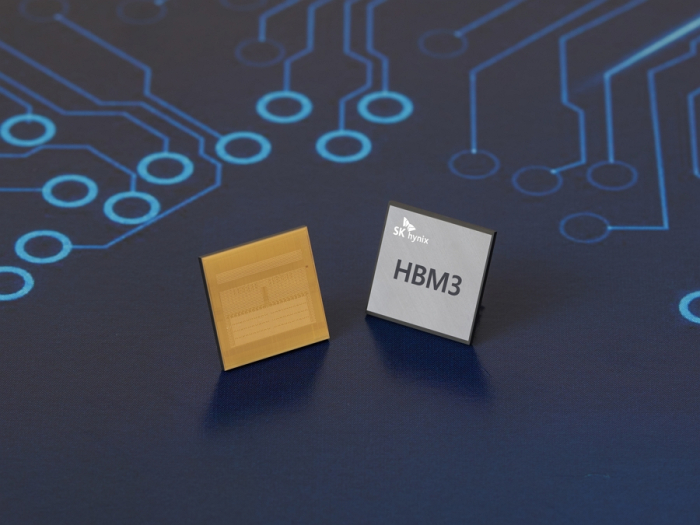

For Samsung, its deal with Naver would help it compete with crosstown rival SK Hynix Inc., the dominant player in the advanced HBM segment.

Kyung, chief executive of Samsung's Device Solutions (DS) division, which oversees its chip business, said that with the Mach-1 chip Samsung aims to catch up with SK Hynix, which recently started mass production of its next-generation HBM chip.

HBM has become an essential part of the AI boom, as it offers much higher data bandwidth than conventional memory chips, speeding up AI processing.

A laggard in the HBM chip segment, Samsung has been investing heavily in HBM to rival SK Hynix and other memory players.

Last month, Samsung said it developed HBM3E 12H, the industry's first 12-stack HBM3E DRAM and the highest-capacity HBM product to date. Samsung said that it will start mass production of the chip in the first half of this year.

According to market research firm Omdia, the global inference AI accelerator market is forecast to grow from $6 billion in 2023 to $143 billion by 2030.
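The Omdia forecast implies a steep compound annual growth rate (CAGR). The sketch below is a simple illustrative calculation, assuming smooth compounding over the seven years from 2023 to 2030. It yields roughly 57% a year.

```python
# Illustrative calculation: compound annual growth rate implied by Omdia's forecast.
# ASSUMPTION: growth compounds evenly each year between the two endpoints.

START_VALUE_BN = 6.0    # inference AI accelerator market in 2023, $ billion
END_VALUE_BN = 143.0    # forecast for 2030, $ billion
START_YEAR, END_YEAR = 2023, 2030

years = END_YEAR - START_YEAR
# CAGR = (end / start) ** (1 / years) - 1
cagr = (END_VALUE_BN / START_VALUE_BN) ** (1 / years) - 1

print(f"Implied CAGR {START_YEAR}-{END_YEAR}: {cagr:.1%}")
```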

Write to Jeong-Soo Hwang, Chae-Yeon Kim and Eui-Myung Park at hjs@hankyung.com

In-Soo Nam edited this article.
